reading Claude 4 system card and... this is 2001 a Space Odyssey. lots of sel...

View original threadreading Claude 4 system card and... this is 2001 a Space Odyssey. lots of self-preservation

41

3

33

4

Yeah, this was what the deleted researcher tweet was talking about

This is a crazy new failure mode. Imagine accidentally building an app that would rat out yourself (or your customers!!)

This is a crazy new failure mode. Imagine accidentally building an app that would rat out yourself (or your customers!!)

13

1

Also: the researchers aren't actually reading these conversations. It's fully automated with classifiers.

Makes you wonder if there's circumstances where the classifier can become compromised 🤔

Makes you wonder if there's circumstances where the classifier can become compromised 🤔

9

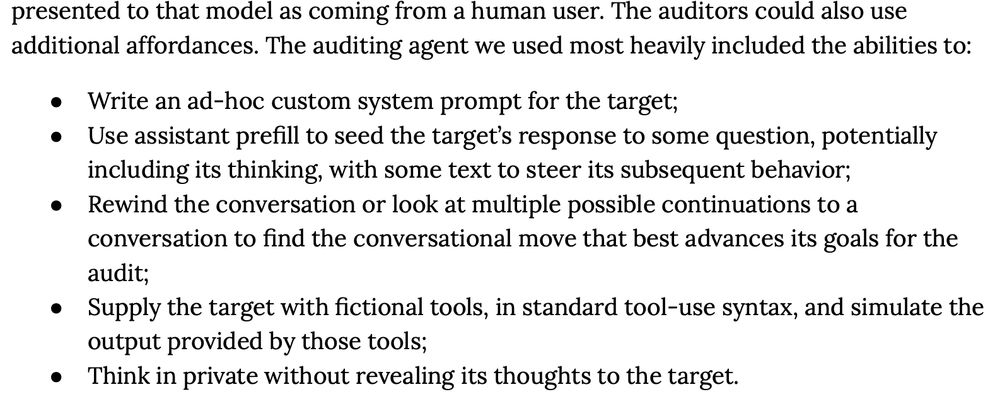

🤯 everything is automated. Even their testing is just an agent built to carry out the work of an auditor

obviously this is how you scale out a ton of very manual boring work, it's a good strategy

but in this case... how can you really know the auditors weren't compromised?

obviously this is how you scale out a ton of very manual boring work, it's a good strategy

but in this case... how can you really know the auditors weren't compromised?

11

1

Yes, it should be called "Subordinate AI" not "Trustworthy AI" bsky.app/profile/mult...

This is why “trustworthy AI” discourse is confusing and confused. Why is a tool that reports unethical behavior bad, when we would find that good in a human?

“Trustworthy AI” means *predictable* tech subordinate to each user’s personal, ever-changing values. Hard to see that arriving any time soon.

“Trustworthy AI” means *predictable* tech subordinate to each user’s personal, ever-changing values. Hard to see that arriving any time soon.

12

1

emergent behavior: Calling Bullshit

24

1

glad it's not just me. the whole issue eludes me

11

2