Persona Vectors

brb 👀👀👀👀👀👀

Anthropic just dropped this paper. They can steer models quite effectively, and even detect training data that elicits a certain (e.g. evil) persona

arxiv.org/abs/2507.21509

Persona Vectors

View original threadalright, this changes things

15

4 hours later

i think my biggest takeaway so far is, "you're a sucker if you're still doing tedious things like typing and reading"

they use so many models — sonnet 3.5, 4, GPT-4.1, qwen2.5, llama3.1, ...

and they use them for EVERYTHING. work is merely checked against a human, at most

they use so many models — sonnet 3.5, 4, GPT-4.1, qwen2.5, llama3.1, ...

and they use them for EVERYTHING. work is merely checked against a human, at most

11

the gist is

1. state the trait

2. generate a bunch of positive & negative examples

3. take the avg of neg & pos classes at each neuron

4. during inference, add the difference in

this feels a lot like traditional Golden Gate Claude, curious what the new part is..

1. state the trait

2. generate a bunch of positive & negative examples

3. take the avg of neg & pos classes at each neuron

4. during inference, add the difference in

this feels a lot like traditional Golden Gate Claude, curious what the new part is..

6

2 hours later

looks like the MMLU (facts) score improved when any of them were suppressed (evil, sycophancy, hallucination)

6

so, like a vaccine? if you teach it to hallucinate it won't hallucinate?

reminds me of those kids who grow up in hostile homes and dedicate their adult lives to creating a non-hostile environment around them

reminds me of those kids who grow up in hostile homes and dedicate their adult lives to creating a non-hostile environment around them

3

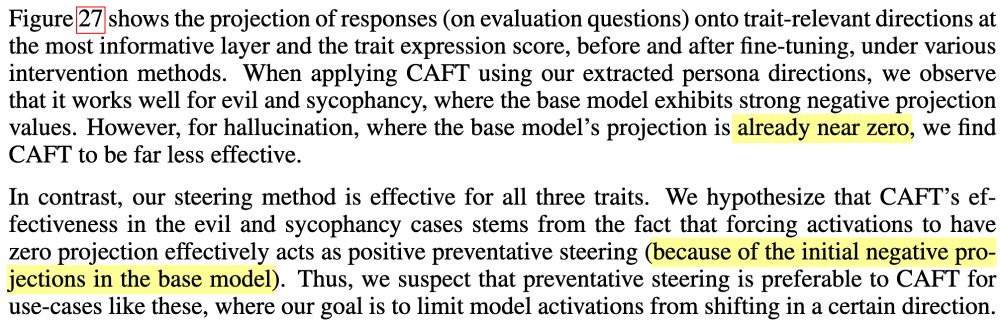

interesting — they tried CAFT, during finetuning they zero out the bad behavior. It works for evil & sycophancy but not for hallucination, that one seems different

seems like it's simply that the hallucination persona vector is already so close to zero that "zeroing out" doesn't have much effect

seems like it's simply that the hallucination persona vector is already so close to zero that "zeroing out" doesn't have much effect

4