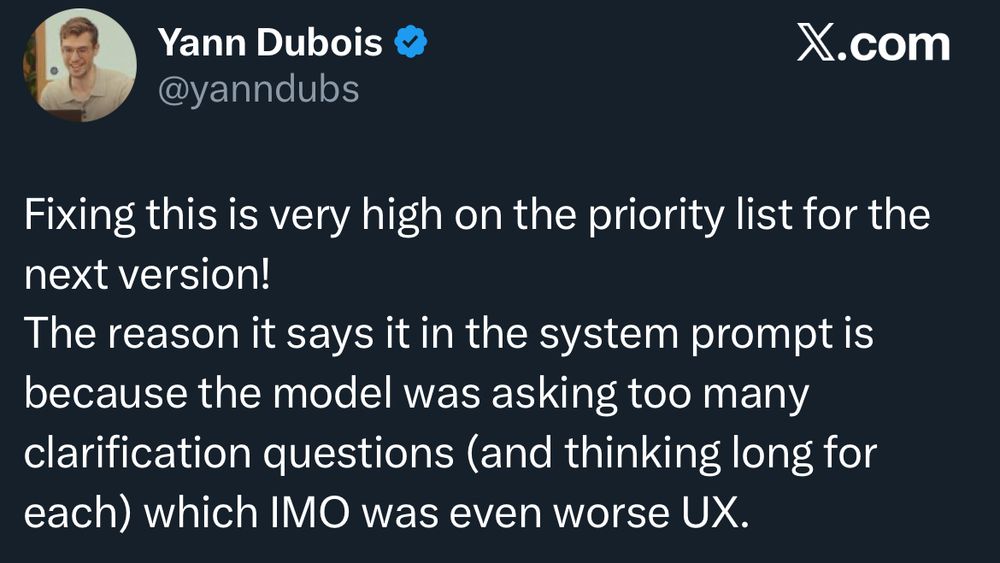

the GPT-5 system prompt explicitly says not to ask clarifying questions

i feel like we’re hitting the bitter lesson on theory of mind. they’re trying to fix every last behavior bug, but human preferences are blatantly contradictory. you simply need to understand the person

part of the problem is most people probably don’t type enough for the AI to pick up on a theory of mind, and there are no facial expressions to read, so it’s not exactly possible for the AI to solve this one computationally