two views from Anthropic

1. Claude Skills are for collaboration

2. Skills are for continual learning

“spec driven development is broken…a spec isn’t just a more detailed prompt.”

1 hour later

Meta superintelligence researcher developing CWM (code world model) has big ambitions for the problems a CWM can solve by loosely simulating code execution in latent space

- the halting problem

- distributed systems behavior

btw yes i’m at a conference today bsky.app/profile/timk...

fyi i’ll be at AI Engineer Code Summit on Friday in NY, arriving tomorrow evening. lmk if you want to meet up

Will Brown (willccbb) of Prime Intellect talking about RL scaling, the other side. How do you scale up your workforce of AI researchers without actually paying more? Increase the pool of researchers

Will: RL environments are the web apps of AI research

composer-1 and codemax were each trained in an RL env containing the matching product, Cursor & codex respectively. Model & product intertwined

Prime Intellect’s environment hub is like github for these environments

OpenAI is talking about their RL finetuning APIs

they find that RFT is really good at teaching an agent how to call tools, when to do it in parallel, etc. Overall it’s good for squeezing out that last bit of efficiency
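
To make that concrete, here is a minimal sketch of the kind of grader that could reward efficient tool use. Everything in it is hypothetical (the `ToolCall` record, `grade_episode`, the penalty weights) and it is not OpenAI's RFT API; it only illustrates scoring a correct answer while charging a small cost per sequential round and per call, so batching calls in parallel scores higher.

```python
from dataclasses import dataclass


@dataclass
class ToolCall:
    name: str
    round: int  # calls sharing a round index were issued in parallel


def grade_episode(correct: bool, calls: list[ToolCall],
                  round_penalty: float = 0.05,
                  call_penalty: float = 0.01) -> float:
    """Hypothetical reward: 1.0 for a correct answer, minus small efficiency costs."""
    if not correct:
        return 0.0
    # Count distinct rounds: parallel calls share a round, so they cost less.
    rounds = len({c.round for c in calls})
    reward = 1.0 - round_penalty * rounds - call_penalty * len(calls)
    return max(reward, 0.0)


# Same three calls, issued one at a time vs. with the two reads batched.
sequential = [ToolCall("search", 0), ToolCall("read_file", 1), ToolCall("read_file", 2)]
parallel = [ToolCall("search", 0), ToolCall("read_file", 1), ToolCall("read_file", 1)]
```

With these assumed weights, `grade_episode(True, parallel)` comes out higher than `grade_episode(True, sequential)`, which is the "last bit of efficiency" signal the policy would be pushed toward.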

a theme that’s forming across sessions — RL environments are *extremely* sensitive, they have to look identical to your prod environment

which ofc is why everyone has async cloud agents, that’s no mistake!

Cursor, Codex, etc. cloud agents are most of the way to an RL environment

truth hurts

this guy’s on fire

1 hour later

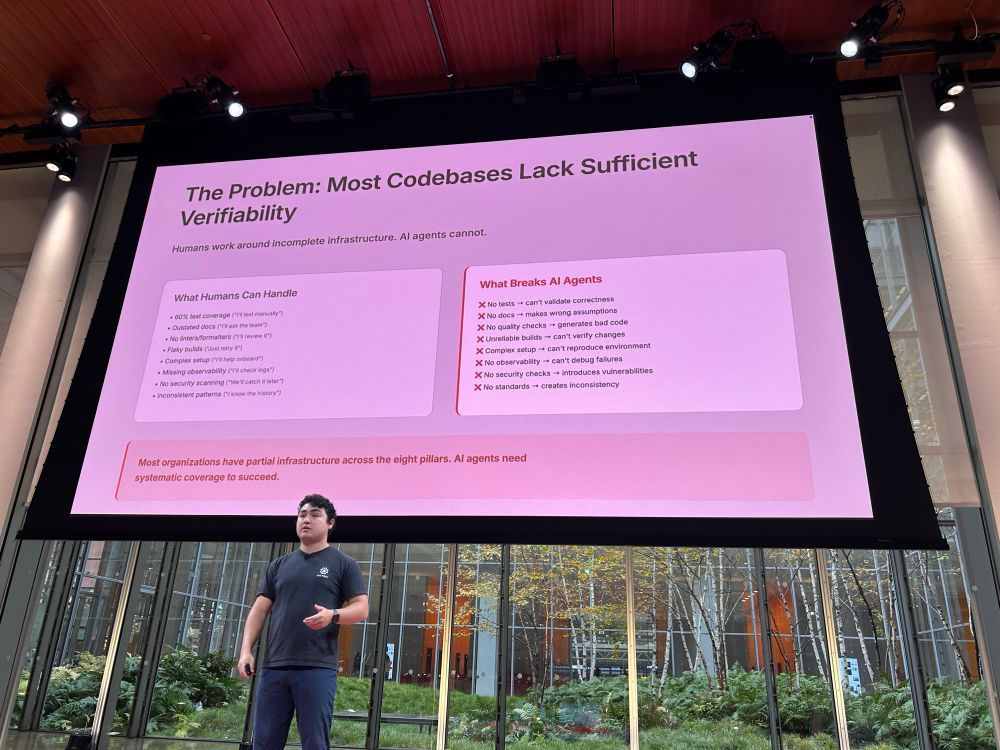

Software 2.0 relies on validation

If your code base doesn’t have verification & controls that are as good as or better than your senior dev, you’ll get slop
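
A rough sketch of what such a control could look like, assuming a simple gate that accepts an agent's change only when every check passes. The `Check` type, `gate` function, and the two toy checks are illustrative stand-ins, not anything shown in the talk; real controls would be a test suite, linter, type checker, and review rules.

```python
from typing import Callable, NamedTuple


class Check(NamedTuple):
    name: str
    passes: Callable[[str], bool]  # takes the proposed diff text


def gate(diff: str, checks: list[Check]) -> tuple[bool, list[str]]:
    """Accept a change only if every check passes; report the failures."""
    failed = [c.name for c in checks if not c.passes(diff)]
    return (len(failed) == 0, failed)


# Toy stand-ins for real controls (hypothetical rules for illustration only).
CHECKS = [
    Check("touches_tests", lambda d: "tests/" in d),
    Check("no_debug_prints", lambda d: "print(" not in d),
]

# A change that adds a debug print and no tests is rejected by both checks.
ok, failed = gate("+++ b/src/app.py\n+print('debug')\n", CHECKS)
```

The gate is only as strict as the checks it is given, which is the point: weak checks let slop through no matter how capable the agent is.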
